Author: Richard

Date Posted: Dec 4, 2023

Last Modified: Dec 4, 2023

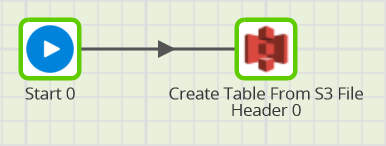

Create Snowflake Table From CSV file

Create a Snowflake table from a staged CSV file.

Call this shared job from a Matillion ETL orchestration job to create a permanent, relational table with columns inferred from a CSV file in S3 cloud storage.

The shared job reads the header row of the S3 file, using pandas to find the column names. It automatically creates a staging table with these column names.
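The header-to-table step described above can be sketched roughly as follows. This is an illustration only, not the shared job's actual script: the function names `read_csv_header`, `clean_column_name` and `build_create_table_sql` are hypothetical, and typing every column as `VARCHAR`, fetching only the first 64 KB of the object, and using `CREATE OR REPLACE` are all assumptions.

```python
import io
import re
from typing import List


def read_csv_header(bucket: str, key: str, max_bytes: int = 65536) -> List[str]:
    """Return the header fields of a CSV object stored in S3."""
    # Imported here so the pure helpers below can be used without these packages.
    import boto3
    import pandas as pd

    s3 = boto3.client("s3")
    # Fetch only the start of the object; assumes the header row fits in max_bytes.
    obj = s3.get_object(Bucket=bucket, Key=key, Range=f"bytes=0-{max_bytes - 1}")
    # nrows=0 asks pandas for the column names without loading any data rows.
    frame = pd.read_csv(io.BytesIO(obj["Body"].read()), nrows=0)
    return list(frame.columns)


def clean_column_name(raw: str) -> str:
    """Turn a CSV header field into a simple upper-case identifier."""
    name = re.sub(r"\W+", "_", raw.strip()).strip("_").upper()
    if not name:
        return "COLUMN"  # assumed fallback for an empty header field
    if name[0].isdigit():
        name = "_" + name  # avoid identifiers that start with a digit
    return name


def build_create_table_sql(table_name: str, headers: List[str]) -> str:
    """Build a CREATE TABLE statement with one VARCHAR column per header (assumed type)."""
    columns = ",\n".join(f'  "{clean_column_name(h)}" VARCHAR' for h in headers)
    return f'CREATE OR REPLACE TABLE "{table_name}" (\n{columns}\n)'
```

A call such as `build_create_table_sql("STG_ORDERS", read_csv_header(bucket, key))` would then yield a statement that can be run against Snowflake. Duplicate header names are not handled separately in this sketch.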

Afterwards, use the S3 Load component to load the file into the new staging table.

This is an alternative to the S3 Load Generator that can handle files programmatically. Combine it with an iterator to create staging tables dynamically for many S3 files, without having to run the S3 Load Generator wizard manually for each file.
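As a rough illustration of the iterator pattern, the sketch below turns a list of S3 object keys into one set of parameter values per CSV file. The helper names `staging_table_name` and `iteration_rows`, the `STG_` prefix, and the naming rule are assumptions made for this example. It also assumes that `s3_filename` accepts the full object key. The keys themselves could come from a boto3 `list_objects_v2` call or from any other listing.

```python
import re
from pathlib import PurePosixPath
from typing import Dict, Iterable, List


def staging_table_name(s3_key: str, prefix: str = "STG_") -> str:
    """Derive a staging table name from the file name part of an S3 key."""
    stem = PurePosixPath(s3_key).stem  # file name without folder or extension
    cleaned = re.sub(r"\W+", "_", stem).strip("_").upper()
    return f"{prefix}{cleaned}"


def iteration_rows(s3_keys: Iterable[str]) -> List[Dict[str, str]]:
    """Build one parameter set per CSV key, skipping non-CSV objects."""
    return [
        {"s3_filename": key, "s3_stg_table_name": staging_table_name(key)}
        for key in s3_keys
        if key.lower().endswith(".csv")
    ]
```

Each resulting dictionary corresponds to one iteration of the shared job, with `s3_bucket_name` supplied separately.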

Parameters

| Parameter | Description |
|---|---|
| s3_bucket_name | Name of the S3 bucket |
| s3_filename | Name of the file |
| s3_csvfile_metadata | Leave blank |
| s3_stg_table_name | Name of table to create |

Prerequisites

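To avoid a ModuleNotFoundError, the following Python modules must be available to the Python 3 interpreter. boto3 and pandas are third-party packages that must be installed; datetime and re are part of the Python standard library.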

- boto3

- pandas

- datetime

- re
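One way to check these prerequisites before running the job is a short script like the one below, for example in a Python Script component. It is a minimal sketch: the names `REQUIRED_MODULES` and `missing_modules` are hypothetical, and it only tests whether each module can be located, not whether its version is suitable.

```python
import importlib.util
from typing import Iterable, List

# Modules listed in the prerequisites above.
REQUIRED_MODULES = ["boto3", "pandas", "datetime", "re"]


def missing_modules(names: Iterable[str]) -> List[str]:
    """Return the module names that the current interpreter cannot locate."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules are available.")
```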

This shared job attempts to read from and write to S3. Ensure that the EC2 instance credentials attached to your Matillion ETL instance include the privileges to do both. For more information, refer to the “IAM in AWS” section in this article on RBAC in the Cloud.

Downloads

Licensed under: Matillion Free Subscription License

- Download METL-aws-sf-1.68.3-create-snowflake-table-from-csv.melt

- Platform: AWS|Azure|GCP

- Target: Snowflake

- Version: 1.68.3 or higher