Author: Matillion

Date Posted: Nov 16, 2023

Last Modified: Nov 22, 2023

Cloud Storage AWK

Run an AWK script against a file held in cloud storage.

This shared job reads one file from cloud storage, passes it through the AWK script provided, and saves the results to a specified cloud storage target.

AWK scripts are well suited to many pre-processing tasks on textual, line-oriented data files such as CSV and TSV. However, they are not suitable for semi-structured formats such as JSON, Avro, ORC, Parquet or XML.
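As a minimal sketch of this kind of line-oriented pre-processing, the following keeps only the first and third columns of a comma-separated file. The sample rows are made up for illustration, and the script assumes no field contains a quoted comma, which a plain field separator cannot handle.

```shell
# Illustrative input: three comma-separated columns, no quoted fields.
picked=$(printf 'id,name,city\n1,alice,Leeds\n2,bob,York\n' |
  awk 'BEGIN { FS = OFS = "," } { print $1, $3 }')

# Show the reduced rows.
echo "$picked"
```

The part between the single quotes is the text that would go into the AWK script parameter.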

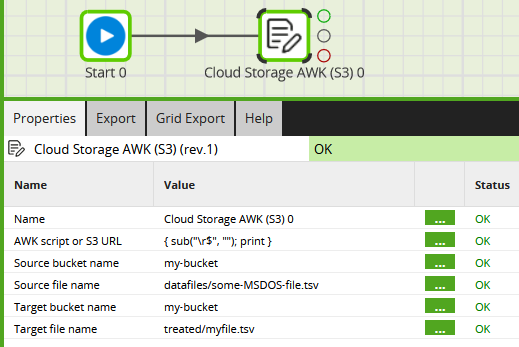

This screenshot is an example using S3 on AWS, with an inline AWK script specified directly among the parameters:

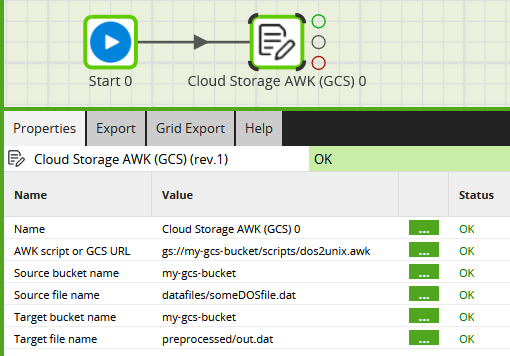

This screenshot is an example using GCS on GCP, with the AWK script downloaded from the specified cloud storage URL:

Parameters

| Parameter | Description |
|---|---|
| AWK script or URL | The AWK script. If this parameter begins with s3:// or gs:// the shared job will try to download the script from that location and use it. |
| Source bucket name | Name of the S3 or GCS bucket containing the source file. Do not include the s3:// or gs:// prefix. Do not include the object path. |
| Source file name | The source file name, including path if any. |
| Target bucket name | Name of the target S3 or GCS bucket. Do not include the s3:// or gs:// prefix. Do not include a path. |
| Target file name | The target file name, including path if any. |
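The sketch below illustrates how these parameters could fit together. It is not the shared job's actual implementation: `build_url` and `script_kind` are hypothetical helpers, and the bucket and file names are placeholders. It assumes the bucket name and file name are simply joined into one object URL, and that the script parameter is treated as a URL only when it starts with s3:// or gs://.

```shell
# Hypothetical helper: join a scheme, a bare bucket name and an object path.
# A leading slash on the object path is dropped to avoid a double slash.
build_url() {
  printf '%s://%s/%s\n' "$1" "$2" "${3#/}"
}

# Hypothetical helper: classify the "AWK script or URL" parameter.
script_kind() {
  case "$1" in
    s3://*|gs://*) echo "url" ;;
    *)             echo "inline" ;;
  esac
}

# Placeholder bucket and file names.
source_url=$(build_url s3 my-source-bucket landing/input.csv)
target_url=$(build_url s3 my-target-bucket clean/output.csv)
echo "$source_url -> $target_url"

script_kind 'NR>1 {print $0}'
script_kind 's3://my-scripts/strip-header.awk'
```

Keeping the prefix out of the bucket parameters, as the table requires, means the same values work whether the scheme is s3 or gs.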

Warning

The target file will be overwritten if it already exists.

Examples

Simple passthrough filter, does not change the input:

{print $0}

Remove blank lines:

NF>0 {print $0}

Remove the first row, useful to remove the header row from a CSV or TSV file:

NR>1 {print $0}

Remove the first two rows:

NR>2 {print $0}

Convert a DOS-encoded file (CRLF line endings) into a Unix/Linux file (LF line endings):

{ sub("\r$", ""); print }
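One way to try these scripts before configuring the shared job is to run them locally against a small sample file. This is a sketch that assumes awk, mktemp, tr and wc are available on your machine. The sample rows are invented for illustration.

```shell
# Build a small sample file: header row, a blank line, then two data rows.
sample=$(mktemp)
printf 'id,name\n\n1,alice\n2,bob\n' > "$sample"

# Remove blank lines.
no_blank=$(awk 'NF>0 {print $0}' "$sample")

# Remove the header row.
no_header=$(awk 'NR>1 {print $0}' "$sample")

# Convert CRLF line endings to LF, then count any carriage returns left over.
cr_left=$(printf 'a,b\r\nc,d\r\n' |
  awk '{ sub("\r$", ""); print }' | tr -cd '\r' | wc -c | tr -d ' ')

echo "$no_blank"
echo "$no_header"
echo "carriage returns left: $cr_left"

# Clean up the temporary file.
rm -f "$sample"
```

Once a script behaves as expected locally, the text between the single quotes can be pasted into the AWK script parameter.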

Prerequisites

The shared job uses awk internally, so awk must be installed on the Matillion ETL instance.

When running on AWS:

- The `aws` command line utility must be installed on your Matillion ETL instance. If the shared job fails with an error `line X: aws: command not found` then please follow this guide to installing the aws command.

- This shared job attempts to read and write to S3. Ensure that the EC2 instance credentials attached to your Matillion ETL instance include the privilege to do this. For more information, refer to the “IAM in AWS” section in this article on RBAC in the Cloud.

When running on GCP:

- The `gsutil` command line utility must be installed on your Matillion ETL instance.

- This shared job attempts to read and write to GCS. Ensure that the Service Account attached to your Matillion ETL VM has the privilege to do this. For more information, refer to the “IAM in GCP” section in this article on RBAC in the Cloud.
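A quick way to confirm these prerequisites, assuming you have shell access to the Matillion ETL instance, is to check that each tool is on the PATH. `check_tools` is a hypothetical helper written for this sketch, not part of the shared job.

```shell
# Report whether each tool named on the command line is on the PATH.
# Returns non-zero if at least one tool is missing.
check_tools() {
  missing=0
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "found: $tool"
    else
      echo "missing: $tool"
      missing=1
    fi
  done
  return "$missing"
}

# On AWS check awk and aws; on GCP replace aws with gsutil.
check_tools awk aws || echo "install the missing tools before running the shared job"
```

This only verifies that the commands exist. It does not check that the instance credentials or Service Account can read and write the buckets.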

Downloads

Licensed under: Matillion Free Subscription License

- Download METL-aws-1.61.6-cloud-storage-awk-s3.melt

  - Platform: AWS

  - Target: Any target cloud data platform

  - Version: 1.61.6 or higher

- Download METL-gcp-1.61.6-cloud-storage-awk-gcs.melt

  - Platform: GCP

  - Target: Any target cloud data platform

  - Version: 1.61.6 or higher