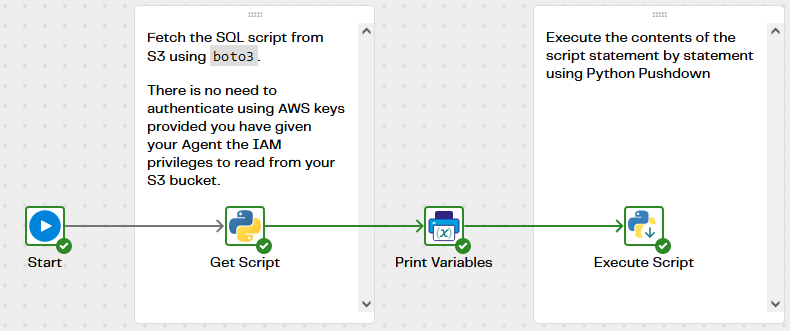

Run the contents of an externally managed SQL script with this Data Productivity Cloud pipeline.

This pipeline reads the contents of an externally managed SQL script - for example in S3 cloud storage - and executes the contents, statement by statement, using Python Pushdown.

You will find more background information in this article on Python Pushdown.

There are three components:

- Get Script - a Python component that fetches the contents of the script

- Print Variables - to display the script at runtime

- Execute Script - a Python Pushdown component that executes the SQL

Get Script

This component fetches the contents of the script using the boto3 Python library.

It’s an ordinary (non pushdown) Python component that runs on your Agent. There is no need to authenticate using AWS keys provided you have given your Agent the IAM privileges to read from your S3 bucket. For more background information on this subject please refer to “IAM in AWS” in this article on Role Based Access Control (RBAC) in the Cloud.

For AWS users the Python is as follows:

import boto3

s3 = boto3.client('s3')

response = s3.get_object(Bucket=p_bucket_name, Key=p_script_key)

context.updateVariable('prvt_script', response['Body'].read().decode('utf-8'))

Note that the bucket name and object path are taken from pipeline variables. If you want to run the script on its own you’ll need to provide default values for those two variables. If you run it by calling it from another pipeline (probably the normal use case) then leave the defaults blank and pass the values in the Run Orchestration component.

The Python code saves the contents of the script into another pipeline variable named prvt_script.

Print Variables - to display the script at runtime

This component is for audit and logging. It just prints the value of prvt_script.

Execute Script - a Python Pushdown component that executes the SQL

This component executes the SQL statements in the prvt_script variable, one by one.

It’s a pushdown Python component that runs inside Snowflake. This means the pushdown SQL runs most efficiently. The statements are run one by one from the script source treated as a stream object.

The script is as follows:

from io import StringIO

for cur in session.connection.execute_stream(StringIO(prvt_script)):

print("Running: ", cur.query)

for ret in cur:

print(ret)

Downloads

Licensed under: Matillion Free Subscription License

Download Run Externally Managed SQL Script - Snowflake - S3.orch.yaml

- Platform : AWS

- Target : Snowflake

Installation instructions

How to Install a Data Productivity Cloud PipelineAuthor: Matillion